Getting Started With Quartz.Net: Part 4 – Configuring Jobs

Part 3 of this series describes how to configure a job to run on Quartz.Net, but it does not go into detail about what each of the job settings does. This post will cover configuring jobs in detail and will provide some examples of job configurations.

Most of the information we will be covering is available in the Quartz.Net documentation for JobDetail.

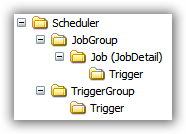

First, let’s take a quick look at how things are organized internally. This will help you understand at a high level how Quartz works inside (from an API point of view), without going into great detail about the object model. I’ll use the folder metaphor to simplify things, but keep in mind that the Quartz.Net object model is not completely hierarchical. The folder-based image above is a simplified representation that you can refer to while reading the explanation below.

The highest level item we’ll look at is the scheduler. The scheduler manages pretty much everything. At a high level, it works with jobs or, more specifically, JobDetails, which it organizes into groups. So, a scheduler has job groups, which in turn contain jobs (JobDetails). Finally, a job can have zero or more triggers. We’re not going to spend too much time looking at triggers (that is the subject of the next post), but we’ll cover the basics here.

By giving a job a name and a group, you are uniquely identifying it. Within a job group, job names must be unique. If you look at the configuration file we covered in the previous post, the first 2 fields (name and group) are what we are talking about here. The third field, description, is useful for explaining what a job is supposed to do.
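As a rough sketch of those first three fields, here is what the start of a job definition might look like. The element names follow the Quartz.Net 1.x quartz_jobs.xml layout as I remember it, and the job name, group, and description are made-up values, so check them against your own file:

```xml
<!-- Fragment only: the start of a job-detail section.
     nightlyCleanup and maintenanceJobs are hypothetical values. -->
<job-detail>
  <!-- name + group together identify the job; the name must be unique within the group -->
  <name>nightlyCleanup</name>
  <group>maintenanceJobs</group>
  <!-- free text, only there to explain what the job does -->
  <description>Deletes temporary files older than a week</description>
  <!-- remaining job settings omitted here -->
</job-detail>
```

A second job could reuse the name nightlyCleanup as long as it lives in a different group.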

Triggers are organized in groups as well, and like jobs, a trigger’s name must be unique within its group. The relationship only goes one way, though: while a job can have multiple triggers, a trigger can be assigned to only one job.
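To make the one-job-per-trigger rule concrete, here is a trimmed-down sketch of how a trigger points back at its job. It assumes the 1.x simple trigger layout, leaves out the scheduling elements entirely, and uses hypothetical names throughout:

```xml
<!-- Fragment only: identity and job reference of a trigger.
     Scheduling elements (repeat count, interval, and so on) are left out. -->
<trigger>
  <simple>
    <!-- the trigger's own name must be unique within its trigger group -->
    <name>nightlyCleanupTrigger</name>
    <group>maintenanceTriggers</group>
    <!-- a trigger references exactly one job, by job name and job group -->
    <job-name>nightlyCleanup</job-name>
    <job-group>maintenanceJobs</job-group>
  </simple>
</trigger>
```

Adding a second trigger for the same job means adding another trigger element with the same job-name and job-group but a different trigger name.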

Now let’s look at the rest of the job parameters, starting with the JobType. As its name states, this is the Type (as in .Net Type) that the scheduler should instantiate to run the job. In the XML, it is written as the fully qualified type name followed by a comma and then the assembly name (without the .dll extension).
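For example, the NativeJob that ships with Quartz.Net lives in the Quartz.Job namespace inside the Quartz assembly, so its entry would look something like the first line below. The second line is a purely hypothetical custom job, included only to show the same pattern with your own assembly:

```xml
<!-- fully qualified type name, then a comma, then the assembly name without .dll -->
<job-type>Quartz.Job.NativeJob, Quartz</job-type>

<!-- hypothetical custom job: class MyCompany.Jobs.ReportJob in MyCompany.Jobs.dll -->
<job-type>MyCompany.Jobs.ReportJob, MyCompany.Jobs</job-type>
```

A job-detail has a single job-type element; the two lines are alternatives, not something you would put side by side.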

The documentation’s description of the volatile parameter contains a double negative, so I’ll try to rephrase it in a way that is a bit less confusing. If you set volatile to true, the job will not be persisted in the job store when the scheduler is shut down. If you set it to false, the job will be persisted in the job store. Note, however, that if you are using the AdoJobStore, the job will be persisted regardless of the value of this parameter. Also keep in mind that if you are using the in-memory job store, no data is persisted at all, and you will have to reschedule all of your jobs on startup.

The durable description is easier to understand. Setting this to true means that the job will be kept around even if it doesn’t have any triggers pointing to it. If it is set to false, the job gets deleted if it doesn’t have any triggers pointing to it.

Next up is the recover (RequestsRecovery) parameter. This parameter tells the scheduler whether it should try to recover (re-execute) the job if something goes wrong with the scheduler itself while the job is running.
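Putting the three flags together, one possible combination is sketched below. The element names are the ones I’d expect in a 1.x quartz_jobs.xml, and the values are just an illustration, not a recommendation:

```xml
<!-- Fragment only: these elements sit inside a job-detail, after job-type -->

<!-- false: persist the job in the job store across scheduler restarts -->
<volatile>false</volatile>
<!-- true: keep the job around even when no trigger points to it -->
<durable>true</durable>
<!-- true: ask the scheduler to re-execute the job if the scheduler itself
     failed while the job was running -->
<recover>true</recover>
```

With durable set to false instead, the job would be deleted as soon as its last trigger went away.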

The last parameter, or rather list of parameters, that we’ll discuss is the JobDataMap. In short, this map is used to pass data to the job. Think of it as a collection of name-value pairs that you can consume within your job. In the default quartz_jobs.xml, the data contained in the map is what you would expect to find if you wanted to invoke a command line program: the executable name and the arguments to pass to the executable. For the NativeJob itself, there are two more parameters that you need to be aware of: waitForProcess (boolean) and consumeStreams (boolean).

The waitForProcess parameter tells the NativeJob whether to wait for the process to finish before considering the job complete.

The consumeStreams parameter should be set to true if your executable produces any output on stderr or stdout. Otherwise, the process might hang. Any output produced by the executable is piped to the event log (if you are using the default configuration).
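Here is a sketch of what such a map could look like for a NativeJob. The keys command, parameters, waitForProcess, and consumeStreams are the ones NativeJob reads; the entry/key/value layout is the 1.x format as I recall it, and the executable and its arguments are made up for the example:

```xml
<!-- Fragment only: a job-data-map inside the job-detail of a NativeJob.
     cleanup.exe and its arguments are hypothetical. -->
<job-data-map>
  <entry>
    <!-- the executable to run -->
    <key>command</key>
    <value>cleanup.exe</value>
  </entry>
  <entry>
    <!-- arguments passed to the executable -->
    <key>parameters</key>
    <value>/days 7</value>
  </entry>
  <entry>
    <!-- wait for the process to exit before the job counts as complete -->
    <key>waitForProcess</key>
    <value>true</value>
  </entry>
  <entry>
    <!-- read stdout and stderr so the process does not hang on output -->
    <key>consumeStreams</key>
    <value>true</value>
  </entry>
</job-data-map>
```

All values are written as plain text in the file, including the two boolean settings.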

That’s it for this post. Hopefully this gives you a more in-depth explanation of how to configure Quartz.Net jobs. In Part 5, we’ll discuss trigger configuration.

Most of the information we will be covering is available in the documentation for Quartz.Net documentation for JobDetail.

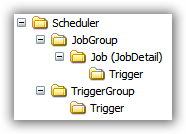

First, let’s take a quick look at how things are organized internally. This will help you understand at a high level how Quartz works inside (from an API point of view), without going into great detail into the object model. I’ll use the folder metaphor to simplify things, but keep in mind that the Quartz.net object model is not completely hierarchical. This folder based image is a simplified representation that you can refer to while reading the explanation below:

The highest level item we’ll look at is the scheduler. The scheduler manages pretty much everything, and at a high level, it works with Jobs, or more specifically, JobDetails which it organizes into groups. So, a Scheduler has JobGroups, which in turn contain jobs (JobDetails). Finally, a job can have zero or more triggers. We’re not going to spend too much time looking at triggers (that is the subject of the next post) but, we’ll cover the basics here.

By giving a job a name and a group, you are uniquely identifying it. Within a job group, job names must be unique. If you look at the configuration file we covered in the previous post, the first 2 fields (name and group) are what we are talking about here. The third field, description, is useful for explaining what a job is supposed to do.

Triggers are organized in groups as well, and like jobs, a trigger’s name must be unique within a group. Unlike jobs, triggers can only be assigned to one job. To summarize, while a job can have multiple triggers, a trigger can only have one job.

Now let’s look at the rest of the job parameters, starting with the JobType. As its name states, this is basically the Type (as in .Net Type) that the scheduler should create to run the job. In the xml it is described by first putting the fully qualified type name and then, separated by a comma, the assembly name (without the .dll extension).

The description of the volatile parameter has a double negative in the documentation description, so I’ll try to rephrase it in a way that is a bit less confusing. If you set the volatile parameter to true, the job will not be persisted in the job store when the scheduler is shut down. If you set the volatile parameter to false, the job will get persisted in the job store. Note, however, that if you are using the AdoJobStore, the job will get persisted regardless of the value of this parameter. Also consider the fact that if you are using the in memory data store… no data will get persisted and you will have to re-schedule all the jobs upon start up.

The durable description is easier to understand. Setting this to true means that the job will be kept around even if it doesn’t have any triggers pointing to it. If it is set to false, the job gets deleted if it doesn’t have any triggers pointing to it.

Next up is the recover (RequestsRecovery) parameter. This parameter tells the scheduler whether it should try to recover (re-execute) the job if something goes wrong with the scheduler itself while the job was running.

The last parameter, or list of parameters that we’ll discuss is the JobDataMap. In short, this map is used to pass data to the job. Think of it as a collection of name value pairs that you can consume within your job. In the default quartz_jobs.xml, the data contained in the map is what you would expect to find if you wanted to invoke a command line program: the executable name and the arguments to pass to the executable. For the NativeJob itself, there are 2 parameters that you need to be aware of: the waitForProcess parameter (boolean) and consumeStreams (boolean) .

The WaitForProcess parameter tells the NativeJob whether to wait for the process to finish before considering the Job complete.

The ConsumeStreams parameters should be set to true if your executable produces any output to stderr or stdout. Otherwise, the process might hang. Any output produced by the executable is piped to the event log (if you are using the default configuration).

That’s it for this post. Hopefully this give you a more in depth explanation of how to configure Quartz.Net jobs. In Part 5, we’ll discuss trigger configuration.

This post appeared first at jayvilalta.com